The duplicate detection enrichment prevents items from being indexed more than once. Items that have already been seen are handled according to the configured policy instead of being indexed again as new items.

| Enrichment name | deduplication |
|---|---|
| Stage | deduplication |
| Enabled by default | Yes |


Overview

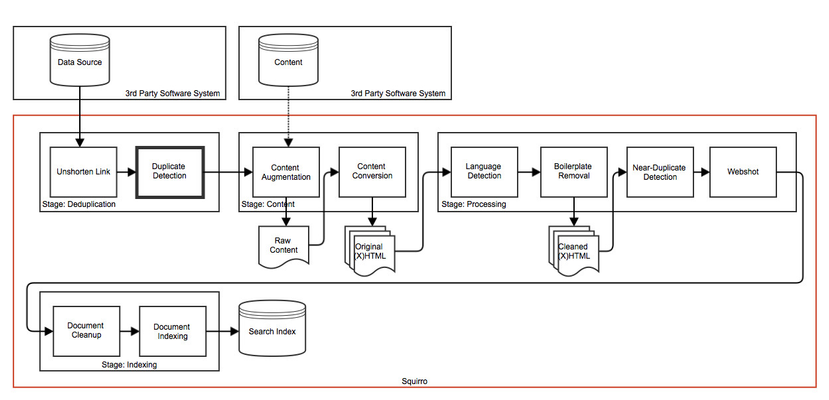

Items sent to Squirro come from different sources. Those sources sometimes send the same data multiple times or, in the case of Twitter, several items mention the same site. To avoid duplicates in the index, Squirro tries to detect identical items (duplicates) and nearly identical items (near-duplicates). This page describes duplicate detection; near-duplicate detection is a separate enrichment.

Duplicate handling has two parts: first the detection of duplicates, and second the action executed once a duplicate is detected. The executed action is specified by a policy.

Detection

The detection logic consults the index to find any items with the same properties (see Item Format) as the incoming item. The properties used for this lookup are configurable. For example, Squirro can first search for an item with the same external_id and, only if no such item exists, search the index for an item with the same title and link.

Unless a data source is considered private (which is the case for sources created by the ItemUploader or DocumentUploader clients from the Python SDK), all sources of a project are included in the lookup. For a private source, only that one source is used for the lookup. This behavior prevents data leakage between private and non-private sources.
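The scoping rule for private sources can be illustrated with a minimal sketch. It assumes a simplified in-memory model in which indexed items are dicts with a `sources` list, the incoming item carries a single `source_id`, and `private_source_ids` is a hypothetical set of private source IDs. The function name `candidate_items` is also hypothetical; this is not Squirro's implementation.

```python
def candidate_items(index, incoming, private_source_ids):
    """Select the indexed items that duplicate detection compares against.

    Illustrative model only: indexed items are dicts with a 'sources' list,
    the incoming item carries a single 'source_id', and private_source_ids
    is a hypothetical set holding the IDs of private (bulk upload) sources.
    """
    source_id = incoming['source_id']
    if source_id in private_source_ids:
        # Private source: only items from this same source are considered
        return [item for item in index if source_id in item['sources']]
    # Non-private source: all sources of the project are included in the lookup
    return list(index)


index = [
    {'id': 1, 'sources': ['feed']},
    {'id': 2, 'sources': ['upload']},
]
# An item from the private 'upload' source is only compared with item 2
print([item['id'] for item in candidate_items(index, {'source_id': 'upload'}, {'upload'})])  # prints [2]
# An item from the non-private 'feed' source is compared with both items
print([item['id'] for item in candidate_items(index, {'source_id': 'feed'}, {'upload'})])  # prints [1, 2]
```

The sketch follows the wording above literally for non-private sources (all sources of the project are searched); how the real lookup treats private items in that case is not specified here.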

Policy

If a duplicate item is detected, the policy specifies which action is executed: the item is associated, replaced or updated. The following table lists the available policies and describes each action in detail.

| Policy | Default | Description |
|---|---|---|
| associate | Default policy for sources configured in the user interface (e.g. feed, Twitter). | The item that is already in the Squirro index gets the additional source_id in its list of sources, and the incoming item is discarded. |
| replace | Default policy for sources created by the ItemUploader or DocumentUploader clients from the Python SDK. | The existing item in Squirro is deleted and the incoming item is processed as is. This is mostly used for sources that deliver growing documents, such as support cases that are amended with additional comments. |
| update | - | The existing item in Squirro is updated with all the properties present in the incoming item. Individual properties are replaced as a whole: for example, if the keywords property is set, all existing keywords are overwritten. |
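The three policies can be contrasted with a minimal sketch. It assumes a plain list of dicts standing in for the Squirro index and a hypothetical `apply_policy` helper; modelling `update` as "no new item is indexed" is also an assumption of this sketch, not documented behavior.

```python
def apply_policy(index, existing, incoming, policy):
    """Apply a deduplication policy to a detected duplicate (illustrative model).

    index is a plain list of item dicts standing in for the Squirro index,
    existing is the indexed duplicate and incoming is the newly arrived item,
    which carries its 'source_id'. Returns the item that continues through
    processing, or None if no new item is indexed.
    """
    if policy == 'associate':
        # Add the incoming source to the existing item; discard the incoming item
        if incoming['source_id'] not in existing['sources']:
            existing['sources'].append(incoming['source_id'])
        return None
    if policy == 'replace':
        # Delete the existing item; the incoming item is processed as is
        index[:] = [item for item in index if item is not existing]
        return incoming
    if policy == 'update':
        # Overwrite every property present on the incoming item as a whole;
        # 'source_id' is skipped because it is only metadata in this model
        for key, value in incoming.items():
            if key != 'source_id':
                existing[key] = value
        return None
    raise ValueError(f'unknown policy: {policy!r}')


index = [{'id': 1, 'sources': ['feed'], 'keywords': {'topic': ['a', 'b']}}]
existing = index[0]
apply_policy(index, existing, {'source_id': 'twitter', 'id': 1}, 'associate')
apply_policy(index, existing, {'source_id': 'feed', 'keywords': {'topic': ['c']}}, 'update')
print(existing)
# prints {'id': 1, 'sources': ['feed', 'twitter'], 'keywords': {'topic': ['c']}}
```

Note how `update` replaces the whole keywords dict rather than merging the new values into it, matching the table above.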

Configuration

| Field | Default | Description |
|---|---|---|
| deduplication_fields | | Which properties (see Item Format) to consider when searching for duplicates. Each sub-list is looked up individually, and the lookup stops as soon as a match is found. If a sub-list contains more than one property, all of those properties need to match for an item to be considered a duplicate. |
| policy | associate | The policy decides which action is executed on duplicates. See above for a table of available policies. |
| provider_support | twitter | Comma-separated list of providers that support comments. For these providers, all new comments are merged into the existing item. |
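The sub-list semantics of deduplication_fields can be sketched in a few lines. This assumes items are plain dicts and uses a hypothetical `find_duplicate` helper; skipping a sub-list when the incoming item lacks one of its properties is an assumption of this sketch, not documented behavior.

```python
def find_duplicate(candidates, incoming, deduplication_fields):
    """Return the first candidate that duplicates incoming, or None.

    Follows the deduplication_fields semantics described above: sub-lists are
    tried in order, every property in a sub-list must match, and the lookup
    stops at the first match. Items are modelled as plain dicts.
    """
    for fields in deduplication_fields:
        # Assumption: a sub-list is skipped if the incoming item lacks one of its properties
        if any(incoming.get(field) is None for field in fields):
            continue
        for candidate in candidates:
            if all(candidate.get(field) == incoming[field] for field in fields):
                return candidate
    return None


candidates = [
    {'id': 1, 'external_id': 'e1', 'title': 'Q3 results', 'link': 'http://example.com/a'},
    {'id': 2, 'external_id': 'e2', 'title': 'Q4 results', 'link': 'http://example.com/b'},
]
# First try external_id alone, then title and link together
fields = [['external_id'], ['title', 'link']]
incoming = {'external_id': 'e9', 'title': 'Q3 results', 'link': 'http://example.com/a'}
print(find_duplicate(candidates, incoming, fields)['id'])  # prints 1
```

Here the external_id lookup finds nothing, so the second sub-list is tried, and item 1 matches because both its title and link are equal to those of the incoming item.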

Examples

The following examples use the Python SDK to show how the duplicate detection enrichment can be configured. To change the default policy, pass a processing_config to the ItemUploader or DocumentUploader.

Item Uploader

The following example details how to update duplicate items.

```python
from squirro_client import ItemUploader

# processing config to update duplicate items
processing_config = {
    'deduplication': {
        'enabled': True,
        'policy': 'update',
        'deduplication_fields': [
            ['external_id'],
            ['link'],
            ['title'],
        ],
    },
}

uploader = ItemUploader(…, processing_config=processing_config)
```

In the example above the processing pipeline is instructed to update duplicate items based on the external_id, link and title properties individually.

If the API is used directly, pass the processing configuration under the processing key of the source's config dictionary, as in the following examples.

New Data Source

The following example details how to update duplicate items for a new feed data source.

```python
from squirro_client import SquirroClient

client = SquirroClient(None, None, cluster='https://next.squirro.net/')
client.authenticate(refresh_token='293d…a13b')

# processing config to update duplicate items
processing_config = {
    'deduplication': {
        'enabled': True,
        'policy': 'update',
        'deduplication_fields': [
            ['external_id'],
            ['link'],
            ['title'],
        ],
    },
}

# source configuration
config = {
    'url': 'http://newsfeed.zeit.de/index',
    'processing': processing_config,
}

# create new source subscription
client.new_subscription(
    project_id='…', object_id='default', provider='feed', config=config)
```

In the example above the processing pipeline is instructed to update duplicate items based on the external_id, link and title properties individually.

Existing Data Source

The following example details how to update duplicate items for an existing source. Items which have already been processed are not updated.

```python
from squirro_client import SquirroClient

client = SquirroClient(None, None, cluster='https://next.squirro.net/')
client.authenticate(refresh_token='293d…a13b')

# get existing source configuration (including processing configuration)
source = client.get_project_source(project_id='…', source_id='…')
config = source.get('config', {})
# the processing configuration is stored under the 'processing' key
processing_config = config.get('processing', {})

# modify processing configuration
processing_config['deduplication'] = {
    'enabled': True,
    'policy': 'update',
    'deduplication_fields': [
        ['external_id'],
        ['link'],
        ['title'],
    ],
}

config['processing'] = processing_config
client.modify_project_source(project_id='…', source_id='…', config=config)
```

In the example above the processing pipeline is instructed to update duplicate items based on the external_id, link and title properties individually.